Landing With Trust

Designing for the future of commercial drone deliveries in urban public spaces

This project investigates how drones can safely complete deliveries in public parks without relying on fixed infrastructure. The focus is on a critical moment: when the drone, unsure about the safety of a landing spot, engages the recipient for guidance.

Course

Final Bachelor Project

Main Deliverables

AR drone simulation (research setup), drone design, project poster, research-based design guidance

Expertise areas

User & Society, Technology & Realization, Maths Data & Computing, Creativity & Aesthetics, Business & Entrepreneurship

Date

11/06/2025

Using visual and audio cues—supported by an AR interface—the drone initiates a brief interaction and waits for a simple gesture, such as a thumbs-up to confirm the landing zone or a pointing gesture to suggest a new one. This human-in-the-loop mechanism helps resolve uncertainty in real time while maintaining user trust and safety.

The final drone redesign integrates six targeted features (F1–F6), each selected to address specific human-drone interaction (HDI) challenges surfaced in the literature and operational limitations identified in leading platforms. The topics include Visual Comprehensibility and Form Factor, Multimodal State Signalling, Acoustic and Physical Safety, Terrain Adaptability and Delivery Handoff.

To evaluate the concept, I developed an open-source Unity-based interactive AR simulation. It includes gesture recognition, cue handling, and drone behavior modelled with modular state machines, and is designed to be generalizable and extensible for future HDI research. The link is available at the bottom of this page. Three scenarios, each representing a significant step in user involvement, were selected for empirical evaluation: S1, S3, and S5, which became conditions C0, C1, and C3.
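To illustrate the state-machine idea behind the confirm/redirect interaction, here is a minimal Python sketch. It is not the Unity code from the simulation. The state names, the cue strings, the timeout handling, and the `max_prompts` parameter are all assumptions made for this example.

```python
from enum import Enum, auto


class State(Enum):
    APPROACH = auto()
    REQUEST_CONFIRMATION = auto()
    LANDING = auto()
    ABORTED = auto()


class Gesture(Enum):
    THUMBS_UP = auto()  # recipient confirms the proposed spot
    POINT = auto()      # recipient suggests a different spot


class LandingStateMachine:
    """Hypothetical landing logic: prompt, wait for a gesture, land or abort."""

    def __init__(self, landing_spot, max_prompts=2):
        self.state = State.APPROACH
        self.landing_spot = landing_spot
        self.max_prompts = max_prompts  # assumed limit before aborting
        self.prompts_sent = 0
        self.cues = []  # log of cues the drone would show or play

    def _emit(self, cue):
        self.cues.append(cue)

    def on_spot_uncertain(self):
        # The drone is unsure about the spot, so it asks the recipient.
        if self.state is State.APPROACH:
            self.state = State.REQUEST_CONFIRMATION
            self.prompts_sent = 1
            self._emit("prompt: confirm or redirect landing spot")

    def on_gesture(self, gesture, pointed_spot=None):
        # Gestures are only meaningful while the drone is waiting for one.
        if self.state is not State.REQUEST_CONFIRMATION:
            return
        if gesture is Gesture.THUMBS_UP:
            self.state = State.LANDING
            self._emit("announce: landing at confirmed spot")
        elif gesture is Gesture.POINT and pointed_spot is not None:
            self.landing_spot = pointed_spot
            self.state = State.LANDING
            self._emit("announce: landing at redirected spot")

    def on_timeout(self):
        # No gesture seen: repeat the prompt, then abort with an explanation.
        if self.state is not State.REQUEST_CONFIRMATION:
            return
        if self.prompts_sent < self.max_prompts:
            self.prompts_sent += 1
            self._emit("prompt: confirm or redirect landing spot")
        else:
            self.state = State.ABORTED
            self._emit("announce: aborting, no confirmation received")


if __name__ == "__main__":
    drone = LandingStateMachine(landing_spot=(2.0, 3.0))
    drone.on_spot_uncertain()
    drone.on_gesture(Gesture.POINT, pointed_spot=(4.0, 1.5))
    print(drone.state, drone.landing_spot)
```

Keeping each transition in its own handler means a new cue or gesture can be added without touching the other states, which is the kind of modularity the simulation aims for.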

Similarly, to present realistic interactions, a 10 × 10-meter park environment was created in Blender 4.2 to evaluate various cues and prompts as either images or animations. The scene included a park setting with a bench, two human figures (a recipient and a bystander), and a centrally positioned redesigned Matternet drone with a dedicated HMI. Cameras were positioned at the eye level of both figures (first-person perspective) and around the area (third-person perspective). The setup was built from royalty-free assets and the Matternet redesign, and served as a tool for creating visuals for questionnaires on cue effectiveness.

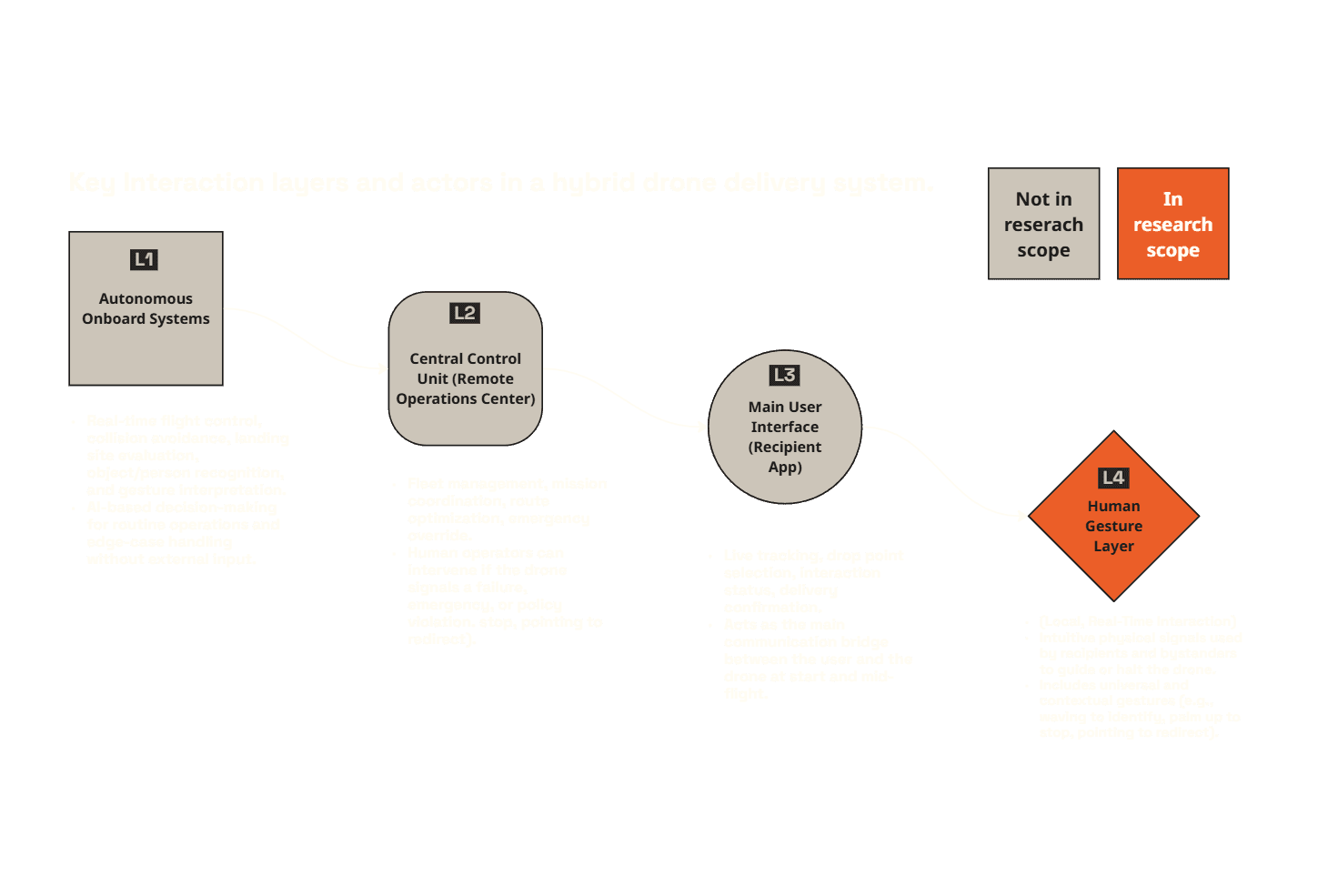

The project touches many layers of the system design to ground the proposed solutions in a holistic concept, as well as uncover and make explicit its underlying assumptions. To ensure safety and reliability, the drone is proposed to operate as part of a multi-layered control architecture, where different actors engage at various points depending on proximity and system status.

Overall, our findings suggest that lightly engaging users through a single confirm/redirect gesture, paired with clear, multimodal state announcements, can strike the ideal balance between autonomy and human oversight in drone landings. Allowing recipients to co-select landing spots further enhances their sense of safety, while transparent explanations of aborts bolster system reliability. However, these results arise from a small (n=12), within-subjects AR prototype study subject to potential fatigue, contrast effects, and environmental variability. Before generalizing to real-world deployments, future work must replicate these insights in larger, more diverse samples, introduce bystander viewpoints, and explore both VR and AR testbeds under controlled and public conditions. By cautiously iterating on these co-designed cues and testing across richer contexts, we can refine human–drone interfaces that are both trustworthy and practical.